Ray Wenderlich [iOS & iPhone Development]

It turns out that integrating natural language processing into an app is quite a tricky problem to solve. You can’t just take whatever text Siri has decoded from the user’s speech, pass it as a string to the app and presto — you’re done! Well, you could, but imagine the number of possible ways your users around the world could talk to your app. Would you really want to write that code?

Think about the times you’ve used Siri. There’s usually a little conversation that happens between you and Siri; sometimes that conversation goes well, and sometimes it doesn’t. Either way, there’s a lot of first-party support work happening behind the scenes.

Before you start this SiriKit tutorial, some warnings: if you've ever been frustrated with Siri, how would you feel having to use Siri for every build and run? Then imagine that debugging is incredibly hard, because you're running in an app extension and because Siri times out if you pause the debugger for too long. Also, imagine you have to build and run on a device, because Siri isn't available in the simulator.

If that hasn’t scared you off, then:

“It’s time to get started.”

I’m not sure I understand.

“Start the tutorial.”

OK, here’s what I found on the web:

I’m just getting you warmed up. You’ll be seeing that sort of thing a lot.

Getting Started

SiriKit works using a set of domains, which represent related areas of functionality, such as Messaging.

Within each domain is a set of intents, which represent the specific tasks that the user can achieve using Siri. For example, within the Messaging domain, there are intents for sending a message, searching for messages and setting attributes on a message.

Each intent is represented by an INIntent subclass, and has an associated handler protocol and a specific INIntentResponse subclass that you use to talk back to SiriKit.
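To make that pairing a little more concrete, here's a framework-free sketch that mirrors its shape. In the real Ride Booking domain the trio is INRequestRideIntent, INRequestRideIntentHandling and INRequestRideIntentResponse; the types below (RideRequestIntent, RideRequestHandling, RideRequestResponse, BalloonRideHandler) are hypothetical stand-ins written in plain Swift so the sketch runs anywhere, and they don't reproduce the Intents framework's actual method signatures.

```swift
// Hypothetical stand-ins that mirror the intent / handler protocol / response
// trio. No Intents framework is imported; these are NOT the real API.

/// Stand-in for an intent: the request Siri has already parsed for you.
struct RideRequestIntent {
    let pickup: String?
    let dropOff: String?
    let partySize: Int?
}

/// Stand-in for the matching response type your code sends back to Siri.
struct RideRequestResponse: Equatable {
    enum Code: Equatable { case success, failure }
    let code: Code
    let message: String
}

/// Stand-in for the handler protocol associated with the intent.
protocol RideRequestHandling {
    func handle(_ intent: RideRequestIntent,
                completion: @escaping (RideRequestResponse) -> Void)
}

/// A toy handler for the balloon app: it only insists on a pickup location.
struct BalloonRideHandler: RideRequestHandling {
    func handle(_ intent: RideRequestIntent,
                completion: @escaping (RideRequestResponse) -> Void) {
        guard let pickup = intent.pickup else {
            completion(RideRequestResponse(code: .failure, message: "No pickup location"))
            return
        }
        completion(RideRequestResponse(code: .success,
                                       message: "Balloon on its way to \(pickup)"))
    }
}

// Usage: the completion handler is how the answer travels back to Siri.
let request = RideRequestIntent(pickup: "Tower Bridge", dropOff: nil, partySize: 2)
BalloonRideHandler().handle(request) { response in
    print(response.message)  // Balloon on its way to Tower Bridge
}
```

The completion-handler style reflects the fact that the real handler protocols report back asynchronously through a response object rather than a return value.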

Language processing in your app boils down to SiriKit deciding which intent and app the user is asking for, and your code checking that what the user is asking makes sense or can be done, and then doing it.
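SiriKit splits that checking into phases: it asks your code to resolve each parameter, then to confirm the request as a whole, and only then to handle it. The sketch below models that sequence in plain Swift. Every type and method name here (Resolution, RideRequest, BalloonBooking, process) is hypothetical, and the four-passenger capacity and the default of one passenger are assumptions made up for illustration, not WenderLoon's real rules.

```swift
// Hypothetical model of SiriKit's resolve -> confirm -> handle sequence.
// Plain Swift; none of these names come from the Intents framework.

/// Outcome of resolving one parameter.
enum Resolution<Value> {
    case success(Value)   // the value is usable as-is
    case needsValue       // Siri should ask the user for it
    case unsupported      // the app can't do this at all
}

struct RideRequest {
    var pickup: String?
    var partySize: Int?
}

struct BalloonBooking {
    let maxPassengers = 4  // assumed basket capacity, for illustration only

    func resolvePickup(_ request: RideRequest) -> Resolution<String> {
        guard let pickup = request.pickup else { return .needsValue }
        return .success(pickup)
    }

    func resolvePartySize(_ request: RideRequest) -> Resolution<Int> {
        // Assumption: a missing party size means one passenger.
        guard let size = request.partySize else { return .success(1) }
        return size <= maxPassengers ? .success(size) : .unsupported
    }

    // Confirm: every parameter resolved; can the request go ahead right now?
    func confirm(balloonsAvailable: Int) -> Bool {
        return balloonsAvailable > 0
    }

    // Handle: actually do the work.
    func handle(pickup: String, partySize: Int) -> String {
        return "Booked \(partySize) passenger(s) from \(pickup)"
    }
}

/// Runs the phases in order, stopping at the first one that fails.
func process(_ request: RideRequest, with app: BalloonBooking,
             balloonsAvailable: Int) -> String {
    guard case .success(let pickup) = app.resolvePickup(request) else {
        return "Where should the balloon pick you up?"
    }
    guard case .success(let size) = app.resolvePartySize(request) else {
        return "Sorry, a balloon can't carry that many passengers."
    }
    guard app.confirm(balloonsAvailable: balloonsAvailable) else {
        return "Sorry, no balloons are available right now."
    }
    return app.handle(pickup: pickup, partySize: size)
}

let booking = BalloonBooking()
print(process(RideRequest(pickup: nil, partySize: 2), with: booking, balloonsAvailable: 3))
// Where should the balloon pick you up?
print(process(RideRequest(pickup: "Tower Bridge", partySize: 2), with: booking, balloonsAvailable: 3))
// Booked 2 passenger(s) from Tower Bridge
```

In the real framework Siri, not your code, drives this loop and turns each outcome into spoken dialogue; your extension only supplies the answers for each phase.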

Would You Like to Ride in my Beautiful Balloon?

First, download the starter sample project here. The sample project for this SiriKit tutorial is WenderLoon, a ride-booking app like no other. The members of the Razeware team are floating above London in hot air balloons, waiting to (eventually) pick up passengers and take them to… well, wherever the wind is blowing. It’s not the most practical way to get around, but the journey is very relaxing. Unless Mic is driving. :]

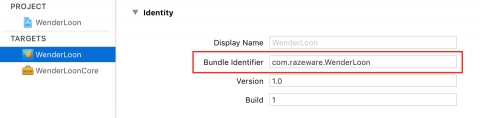

Open up the sample project. Before you can start, you'll need to amend the bundle identifier of the project so that Xcode can sort out your provisioning profiles. Using Siri requires entitlements, and you need to run the app on a device, which means you need your own bundle ID.

Select the WenderLoon project in the project navigator, then select the WenderLoon target. Change the Bundle identifier from com.razeware.WenderLoon to something unique; I'd suggest replacing razeware with something random.

In the Signing section, choose a development team.

Select the WenderLoonCore framework target, change its bundle identifier and select a development team there as well.

Connect a device running iOS 10 and build and run to confirm that everything is working:

You’ll see some balloons drifting somewhere over London. The app doesn’t do very much else — in fact, you’ll be doing the rest of your work in an extension.

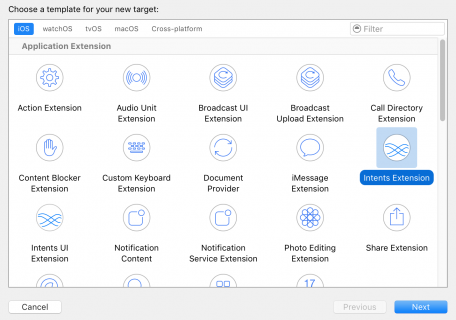

Add a new target using the plus button at the bottom of the target list, or by choosing File\New\Target….

Choose the iOS/Application Extension/Intents Extension template.

On the next screen, enter RideRequestExtension for the product name. Don’t check the Include UI Extension box. If you’re prompted to activate a new scheme, say yes.
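The new target is where Siri talks to your app. The template's principal class, IntentHandler (a subclass of INExtension), is asked via handler(for:) for an object that can handle each incoming intent. Here's a minimal plain-Swift model of that routing; IntentRouter, the Intent and IntentHandling protocols and the handler types are hypothetical stand-ins, not the template's generated code.

```swift
// Hypothetical model of an Intents extension's entry point routing each
// intent to the object that handles it. Not the template's generated code.

protocol Intent {}
struct RequestRideIntent: Intent { let pickup: String }
struct GetRideStatusIntent: Intent {}
struct SendMessageIntent: Intent {}   // a domain this extension doesn't support

protocol IntentHandling {
    func handle(_ intent: Intent) -> String
}

struct RideRequestHandler: IntentHandling {
    func handle(_ intent: Intent) -> String {
        guard let ride = intent as? RequestRideIntent else { return "Unexpected intent" }
        return "Requesting a balloon at \(ride.pickup)"
    }
}

struct RideStatusHandler: IntentHandling {
    func handle(_ intent: Intent) -> String {
        return "Your balloon is drifting your way"
    }
}

/// Stand-in for the extension's principal class.
struct IntentRouter {
    /// Returns a handler for the intent, or nil if this extension doesn't support it.
    func handler(for intent: Intent) -> IntentHandling? {
        switch intent {
        case is RequestRideIntent: return RideRequestHandler()
        case is GetRideStatusIntent: return RideStatusHandler()
        default: return nil
        }
    }
}

let router = IntentRouter()
let ride = RequestRideIntent(pickup: "Hyde Park")
print(router.handler(for: ride)?.handle(ride) ?? "Unsupported")
// Requesting a balloon at Hyde Park
```

Returning a separate handler per intent keeps each domain's logic isolated; a small extension can just as well return itself for every intent it supports.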
